Clown World has reached the point that humanity is diverging into subspecies. The one type believes that what you do reflects your character; the other type believes only in a full stomach.

https://voxday.net/2023/02/23/overwhelmed-by-ai/

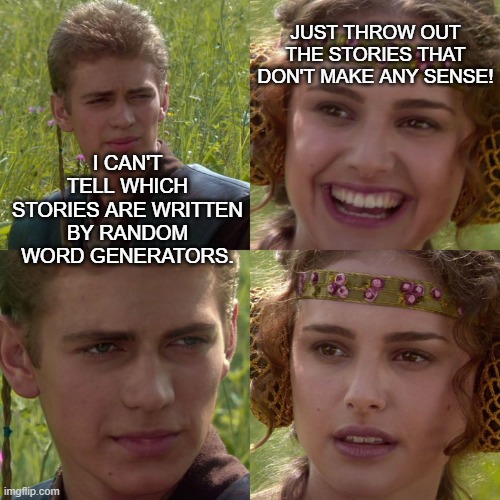

Live by the sword, die by the sword. Clarkesworld science fiction magazine is forced to close to submissions by a flood of ChatGPT entries.

I don’t know why this strikes me as particularly funny, but it does. Especially because it won’t be long before SFWA has its first fake member, given the way the most successful clout-chasing con artists think. Racking up three professional publications wouldn’t be hard, and I don’t think the ever-so-inclusive organization has any specific requirement that its members be human or actually exist.

It has to be admitted. For all its evil and awfulness, Clown World can be amusing sometimes.

I was confused rather than amused.

Come on, now, how hard is it to… wait… people are using AI to do their ENTIRE jobs? And the people reviewing those literary products of incapable-of-creativity chatbots cannot tell the difference?

We aren’t talking about AIs that pass the Turing test. We’re talking about humans who have FAILED the Turing test for YEARS! How long has this been going on? How many people are trying to use AIchat whatevers to cheat?

[GunnerQ googles ‘AI plagiarism’]

I had no idea!

Mississippi research helping to stop AI plagiarism

https://www.magnoliastatelive.com/2023/02/23/mississippi-research-helping-to-stop-ai-plagiarism/

Since the launch of ChatGPT in November, the online tool has gained a record-breaking 100 million active users. Its technology, which automatically generates text for its users based on prompts, is highly sophisticated. But are there ethical concerns? A University of Mississippi professor has co-authored a paper, led by collaborators at Penn State University, showing that artificial intelligence-driven language models, possibly including ChatGPT, are guilty of plagiarism, in more ways than one. “My co-authors and I started to think, if people use this technology to write essays, grant proposals, patent applications, we need to care about possibilities for plagiarism,” said Thai Le, assistant professor of computer and information science in the School of Engineering. “We decided to investigate whether these models display plagiarism behaviors.”

You just witnessed academic integrity pass negative-infinity. The steaming mounds of LIES being pumped out of America’s education system were just revealed to be so corrupt, so void of meaning, so MEANINGLESS, that a random word generator is creating a crisis of scholarship.

Ditto the science fiction community.

Ditto the grant-funding system of… eh, that was always money laundering. Submit ten thousand words in your application, to disguise the fact that the only two words that matter are who’s your globalist daddy.

And I’ve long held doubts about instruction manuals for small consumer goods.

A college student created an app that can tell whether AI wrote an essay

https://www.npr.org/2023/01/09/1147549845/gptzero-ai-chatgpt-edward-tian-plagiarism

By Emma Bowman, 9 January 2023

See? It’s not hard to detect a fake. At least, it shouldn’t be.

Edward Tian, a 22-year-old senior at Princeton University, has built an app to detect whether text is written by ChatGPT, the viral chatbot that’s sparked fears over its potential for unethical uses in academia.

ChatGPT does not understand the concept of ethics… but it can write an essay on the topic for you?

Edward Tian, a 22-year-old computer science student at Princeton, created an app that detects essays written by the impressive AI-powered language model known as ChatGPT.

Tian, a computer science major who is minoring in journalism, spent part of his winter break creating GPTZero, which he said can “quickly and efficiently” decipher whether a human or ChatGPT authored an essay.

Either this was easy, or Tian is a genius. No sarcasm this time!

His motivation to create the bot was to fight what he sees as an increase in AI plagiarism. Since the release of ChatGPT in late November, there have been reports of students using the breakthrough language model to pass off AI-written assignments as their own.

“there’s so much chatgpt hype going around. is this and that written by AI? we as humans deserve to know!” Tian wrote in a tweet introducing GPTZero.

That’s speciesist!

Tian said many teachers have reached out to him after he released his bot online on Jan. 2, telling him about the positive results they’ve seen from testing it.

I already knew that academia was trash… but this is just sad, man. Teachers don’t know how to combat plagiarism? Why are they only now starting to care?

And to think, I paid attention and tried my best in college.

The college senior isn’t alone in the race to rein in AI plagiarism and forgery. OpenAI, the developer of ChatGPT, has signaled a commitment to preventing AI plagiarism and other nefarious applications. Last month, Scott Aaronson, a researcher currently focusing on AI safety at OpenAI, revealed that the company has been working on a way to “watermark” GPT-generated text with an “unnoticeable secret signal” to identify its source.

The problem here is post-modernism. The idea that truth doesn’t matter. Was that story written by a human or robot? Does it matter? Can you even tell without a tool?

The answer is yes. Truth does matter. It can be detected and verified. But that is an exclusively Christian statement, not a scientific statement. As we’ve seen from the experiment-reproduction problems that !science! has been struggling against.

I hate this world. The “artist” doesn’t care if his paint squiggles qualify as art, so long as he makes the sale, and the people looking at his art don’t care either. Everything is so fake and gay, a science fiction magazine doesn’t know if the stories submitted to them even have a plot. Credit to Clarkesworld for at least caring that they can’t tell.

The reason AI is being used to cheat and make forgeries is not because it became available. It’s because cheating and forgery have become an accepted part of American culture. Students are learning what they’re being taught, to wit, postmodernism and faggotry. Everything is fake and gay so just check the boxes and goof off.

I’ve wondered why AI has been extremely popular among socialist types even before it existed, and now I have the answer. If nothing matters… and you can make someone else do your work… that’s as good as life gets.

This is the difference between being a creator and a consumer. Christ wants us to be active participants in our lives, becoming what we do, living for purpose and accomplishments of varying types, whereas for the atheist, the entirety of life is selecting the least painful path into oblivion.

That is also why, eventually, the WEF “get into the pod and eat the bugs” people will succeed. Because when the time comes, it’ll be the path of least resistance.

I did a bit of interacting with ChatGPT, and the first thing I asked it was for the most common winning lottery numbers. It told me it was “unethical.” True story.

Risky source. Had me on pinnails.

The goal is to confuse. Where confusion is not possible, exhaustion is an acceptable alternative.

It is the reason the tactic of overwhelming, never-ceasing lies is used. The lies can be contradictory; it does not matter. Those who attempt to argue based on merit will never find the thread and convince others, as one is quickly buried under new lies, and relevance is lost.

AI is coveted because it allows both confusion and exhaustion through nonstop lies. Engaging ties up true human resources fighting against 0s and 1s.

The solution, as always, is to not play. Identifying trolls online is the skillset everyone has had the opportunity to hone since 2000.